SmokeyDope

- 8 Posts

- 40 Comments

“Dyson Spheres? Look, playing with sunlight and mirrors was a fun side project, but you want to know a much more advanced method of generating power?”

“Please don’t…”

“That’s right! By hurling entire water worlds into a star, we then capture the released steam which powers our gravitationally locked dynamo network.”

1·6 days ago

It explicitly checks for web browser properties to apply challenges, and all its challenges require basic web functionality like page refresh. Unless the connection to your server involves handling a user agent string, it won’t work. At least, I think this is how it works. Hope this helped.

2·6 days ago

Fantastic explanation, thank you

I’m smoking weed about you smoking weed about it.

351·6 days ago

Something that hasn’t been mentioned much in discussions about Anubis is that it has a graded tier system for how sketchy a client is, and it changes the kind of challenge based on a weighted priority system.

The default bot policies it comes with are set up so squeaky clean regular clients are passed through, then only slightly weighted clients/IPs get the metarefresh, and it’s only when you get to the moderate-suspicion level that JavaScript Proof of Work kicks in. The bot policy and weight triggers for these levels, the challenge action, and the duration of a client’s validity are all configurable.
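To make the tier idea concrete, here is a rough Python sketch of how a weighted score could map to an action. This is not Anubis’s code or its config schema: the `Tier` class, the threshold numbers, the action strings, and the function names are all made up for illustration, and the real values live in the site’s bot policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    max_weight: int  # inclusive upper bound on the client's accumulated weight
    action: str      # what the proxy does with the request


# Hypothetical thresholds, for illustration only.
TIERS = [
    Tier("clean", 0, "ALLOW"),
    Tier("mild-suspicion", 9, "CHALLENGE:metarefresh"),
    Tier("moderate-suspicion", 19, "CHALLENGE:proof-of-work"),
]
FALLBACK = "CHALLENGE:proof-of-work-hard"  # anything above the last tier


def score(matched_rule_weights: list[int]) -> int:
    """Sum the weight adjustments of every policy rule the client matched."""
    return sum(matched_rule_weights)


def pick_action(weight: int) -> str:
    """Return the action of the first tier whose bound covers the weight."""
    for tier in TIERS:
        if weight <= tier.max_weight:
            return tier.action
    return FALLBACK
```

With these made-up numbers, a client that matches no suspicious rules passes straight through, one small penalty gets the no-JS refresh, and only stacked penalties reach proof of work.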

It seems to me that the sites that heavy-hand the proof of work for every client, with validity that only lasts 5 minutes, are the ones giving Anubis a bad rap. The default bot policy settings Anubis comes with don’t trigger PoW on the regular Firefox Android clients I’ve tried, including hardened IronFox. Meanwhile other sites show the finger wag on every connection no matter what.

It’s understandable why some choose strict policies, but they give the impression this is the only way it should be done, which is overkill. I’m glad there are config options to mitigate the impact on normal user experience.

3·7 days ago

What use cases does Perplexity cover for you that Claude doesn’t?

611·7 days ago

There’s a challenge option that doesn’t require JavaScript. The responsibility lies with site owners to configure it properly IMO, though you can make the argument that it’s not the default, I guess.

https://anubis.techaro.lol/docs/admin/configuration/challenges/metarefresh

From docs on Meta Refresh Method

Meta Refresh (No JavaScript)

The `metarefresh` challenge sends a browser a much simpler challenge that makes it refresh the page after a set period of time. This enables clients to pass challenges without executing JavaScript.

To use it in your Anubis configuration:

    # Generic catchall rule
    - name: generic-browser
      user_agent_regex: >-
        Mozilla|Opera
      action: CHALLENGE
      challenge:
        difficulty: 1 # Number of seconds to wait before refreshing the page
        algorithm: metarefresh # Specify a non-JS challenge method

This is not enabled by default while this method is tested and its false positive rate is ascertained. Many modern scrapers use headless Google Chrome, so this will have a much higher false positive rate.

5·7 days ago

Security issues are always a concern; the question is how much. Looking at them, they seem at most to be ways to circumvent the Anubis redirect system and get to your page using very specific exploits. These are marked as low to moderate priority, and I do not see anything that implies something like system-level access, which is the big concern. Obviously do what you feel is best, but IMO it’s not worth sweating about. The nice thing about open source projects is that anyone can look through the code and fix things; if this gets more popular you can expect bug bounties and professional pen testing submissions.

265·7 days ago

You know, the thing is that they know the character is a problem/annoyance. That’s how they grease the wheel on selling subscription access to a commercial version with different branding.

https://anubis.techaro.lol/docs/admin/botstopper/

pricing from site

Commercial support and an unbranded version

If you want to use Anubis but organizational policies prevent you from using the branding that the open source project ships, we offer a commercial version of Anubis named BotStopper. BotStopper builds off of the open source core of Anubis and offers organizations more control over the branding, including but not limited to:

- Custom images for different states of the challenge process (in process, success, failure)

- Custom CSS and fonts

- Custom titles for the challenge and error pages

- “Anubis” replaced with “BotStopper” across the UI

- A private bug tracker for issues

In the near future this will expand to:

- A private challenge implementation that does advanced fingerprinting to check if the client is a genuine browser or not

- Advanced fingerprinting via Thoth-based advanced checks

In order to sign up for BotStopper, please do one of the following:

- Sign up on GitHub Sponsors at the $50 per month tier or higher

- Email sales@techaro.lol with your requirements for invoicing, please note that custom invoicing will cost more than using GitHub Sponsors for understandable overhead reasons

I have to respect the play tbh, it’s clever. Absolutely the kind of greasy shit play that Julian from Trailer Park Boys would do if he were an open source developer.

2·8 days ago

Hi Frosty 0/ it’s been a while! Sorry I don’t comment more often, but I see every stoner meme posted here by you lol. Hope you’re doing well, and if you celebrate turkey day, hope it went well :)

131·8 days ago

The only reason the first one was any good was because they copied the homework of Unreal. All world building after the first has been shit, and people only watched the first because of 3D, which was a massive flex at the time.


Contributed by Rúben Alvim (1) on 18.07.2004.

One of director James Cameron’s pet projects after Titanic was an epic sci-fi extravaganza called Avatar, much hyped in Hollywood circles at the time and poised to redefine the notion of a truly alien world on the big screen.

The project fell apart some years ago, but the scriptment (a hybrid between a script and a treatment ) by James Cameron still exists. Interestingly, you can find quite a few similarities between it and Unreal:

Both feature a basic plot premise where, by virtue of circumstances mostly beyond his control, a reluctant hero becomes the saviour of the native race of an alien planet forced to mine their land for ore of utmost importance to an invading race coming from the skies. In both cases the saviour is seen by the natives as someone who also came from the skies and is thus initially met with some alarm or distrust only to be later hailed as a pseudo-messiah.

The native race is called “Na’vi” in Avatar and “Nali” in Unreal. The physical description of the Na’vi by Cameron can be visualised as basically a cross between the Nalis’ tall, lean, slender bodies and the IceSkaarjs’ blueish skin colour patterns, facial features, ponytail-like dreadlocks and caudal appendages.

The Nali in Unreal worship goddess Vandora. The home planet of the Na’vi in Avatar (which the Na’vi worship as a goddess entity) is named Pandora.

In Avatar, one of the most dazzling alien settings described is a huge set of sky mountains, “like floating islands among the clouds”. One of the most memorable vistas in Unreal is Na Pali, thousands of miles up in the cloudy sky amidst a host of floating mountains. The main sky mountain range in Avatar is called “Hallelujah Mountains”. The main Unreal level set in Na Pali is called “Na Pali Haven”. Both include beautiful visual references to waterfalls streaming down the cliffs and dissolving into the clouds below.

The Earth ship in Avatar is called “ISV-Prometheus”. One of the levels in Unreal takes place in the wreck of a Terran ship called “ISV-Kran”. Even more striking, in the expansion pack Return to Na Pali, the crashed ship the player is asked to salvage is called “Prometheus”.

One of the deadly examples of local fauna in Unreal is the Manta, essentially a flying manta-ray. In Avatar, one of the most lethal aerial creatures is the Bansheeray, basically a flying manta-ray. The expansion Return to Na Pali even features a Giant Manta, while in Avatar one of the most formidable predators is a giant Bansheeray, which Cameron dubbed “Great Leonopteryx”.

In the two stories (especially Return to Na Pali, on Unreal’s end), a plot point arises from the fact the precious ore behind the invasion of the planet (“tarydium” in Unreal, “unobtanium” in Avatar) causes problems in the scanners.

Unreal was in development for several years before its release in 1998. The Avatar scriptment was probably finished as early as 1996-97. Bearing all the above in mind the temptation to start wondering about further suspicious parallels may be quite strong, but in spite of these similarities both titles have few else in common and many aspects actually veer off in wildly different directions. Even so, the coinciding factors can make for an interesting minutia comparison.

3·11 days ago

Plot twist: there are still sewing needles and thimbles in there.

10·12 days ago

The electrical fire thing is mostly because there are people in this world who will absolutely try running two 1500 W space heaters on a single multiplug extension. It’s not the quantity of plugs, it’s how much power is flowing through them, as well as the quality of the cabling used. From the image it looks like a couple of phone chargers, which isn’t the worst thing in the world.
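Quick back-of-the-envelope version of that, as a Python sketch. The 120 V mains voltage, the 15 A strip rating, and the 10 W charger draw are assumptions picked for illustration; check the label on your own strip.

```python
def strip_load(appliance_watts, mains_volts=120.0, rated_amps=15.0):
    """Return (current drawn in amps, whether it exceeds the strip's rating)."""
    total_watts = sum(appliance_watts)
    amps = total_watts / mains_volts  # I = P / V
    return amps, amps > rated_amps


# Two 1500 W space heaters vs. two phone chargers at an assumed 10 W each.
heaters = strip_load([1500, 1500])
chargers = strip_load([10, 10])
```

Under those assumptions the two heaters pull 25 A through a strip rated for 15 A, while the chargers barely register. The number of plugs never enters the calculation, only the total wattage.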

3·16 days ago

Thanks for spending time to put these links together seitzer, you’re awesome! By chance do you have any personal tips/advice for getting started?

Did you write this, genuinely? It is pure poetry such that even Samurai would go “hhhOooOOooo!”.

Yes I did! I spent a lot of time cooking up an axiomatic framework for myself on this stuff, so I’m happy to have the opportunity to distill my current ideas for others. Thanks! :)

And it is so interesting, because, what you are talking about sounds a lot like computational constraints of the medium performing the computation. We know there are limits in the Universe. There is a hard limit on the types of information we can and cannot reach. Only adds fuel to the fire for hypotheses such as the holographic Universe, or simulation theory.

The Planck length, Planck time, and Planck constant are all ultimate computational constraints on physical interactions: the Planck length is the smallest meaningful scale, the Planck time is the smallest meaningful interval (a universal framerate), and Planck’s constant ties the two together, telling us how fast things can compute at the smallest meaningful steps of interaction and setting ultimate bounds on how much energy we can put into physical computing.
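For reference, here is a small Python sketch that derives the Planck length and Planck time from the standard definitions, l_P = sqrt(ħG/c³) and t_P = l_P/c. The constants are rounded CODATA values typed in by hand, so treat the last digits as approximate.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J*s
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0       # speed of light, m/s

# Planck length: the scale where quantum and gravitational effects meet.
planck_length = math.sqrt(HBAR * G / C**3)  # metres

# Planck time: how long light takes to cross one Planck length.
planck_time = planck_length / C  # seconds
```

That works out to roughly 1.6e-35 m and 5.4e-44 s, which are the “smallest meaningful step” and “framerate” numbers being talked about here.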

There’s an important insight to be had here which I’ll share with you. Currently when people think of computation they think of digital computer transistors, Turing machines, qubits, and mathematical calculations. They picture our universe being run on an alien’s hard drive or some shit like that, because that’s where we’re at as a society culturally and technologically.

Calculation is not this, or at least it’s not just this. A calculation is any operation that actualizes/changes a single bit in a representational system’s current microstate, causing it to evolve. A transistor flipping a bit is a calculation. A photon interacting with a detector to collapse its superposition is a calculation. The sun computes the distribution of electromagnetic waves and charged particles it ejects. Hawking radiation/virtual particles compute a distinct particle from all the possible ones that could be formed near the event horizon.

The neurons in my brain firing to select the next word in this sentence from the probabilistic sea of all the things I could possibly say is a calculation. A gravitational wave emanating from a black hole merger is a calculation. Drawing on a piece of paper is a calculation, actualizing one drawing from all the things you could possibly draw. Smashing a particle into its base components is a calculation, and so is deriving a mathematical proof through cognitive operation and symbolic representation. From a certain phase space perspective, these are all the same thing: operations that change the current universal microstate to another, iteratively flowing from one microstate bit actualization/superposition collapse to the next. The true nature of computation is the process of microstate actualization.

Landauer’s principle from classical computer science states that any classical computer transistor bit/microstate change has a certain minimum energy cost. This can easily be extended to quantum mechanics to show that every superposition collapse into a distinct qubit of information has the same actional energy cost structure, directly relating to Planck’s constant. Essentially, every time two parts of the universe interact, it is a computation that costs time and energy to change the universal microstate.
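To put a number on that energy cost: the textbook form of Landauer’s bound is E = k_B · T · ln 2 per bit, and strictly speaking it is stated for erasing a bit (a logically irreversible operation). A minimal Python sketch, with 300 K as an assumed room temperature:

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact in the SI)


def landauer_limit_joules(temperature_kelvin: float) -> float:
    """Minimum energy dissipated per erased bit at the given temperature."""
    return K_B * temperature_kelvin * math.log(2)


room = landauer_limit_joules(300.0)  # assumed room temperature
```

At room temperature that is on the order of 3e-21 J per bit. The floor is tiny, but it is never zero, and it scales with temperature.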

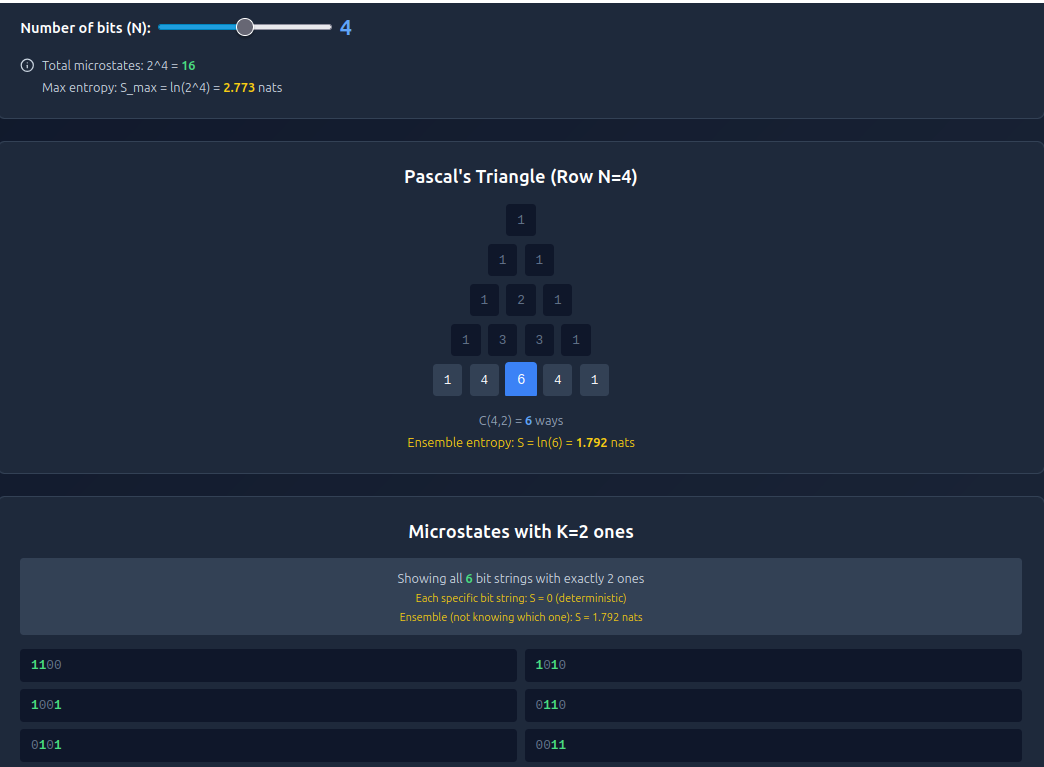

If you do a bit more digging with the logic that comes from this computation-as-actualization insight, you discover the fundamental reason why combinatorics is such a huge thing and why Pascal’s triangle and the binomial coefficients show up literally everywhere in STEM. Pascal’s triangle directly governs the number of microstates a finite computational phase space can access, as well as the distribution of order and entropy within that phase space. Because the universe itself is a finite state representation system with 10^122 bits to form microstates, it too is governed by Pascal’s triangle. The 10^122th row of Pascal’s triangle holds the binomial coefficient distribution that encodes all the possible microstates our universe can evolve into.
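A toy version of the counting argument, as a Python sketch: for an n-bit system, row n of Pascal’s triangle says how many microstates have exactly k bits set, and the whole row sums to 2^n. The 8-bit size is just an example small enough to read; the function name is mine.

```python
from math import comb


def microstates_by_ones(n_bits: int) -> list[int]:
    """Row n of Pascal's triangle: count of n-bit states with k bits set."""
    return [comb(n_bits, k) for k in range(n_bits + 1)]


row = microstates_by_ones(8)
total = sum(row)  # every possible 8-bit microstate, i.e. 2**8
```

The ends of the row (all zeros, all ones) each have exactly one microstate, while the middle of the row holds the bulk of them. That is the order-vs-entropy distribution in miniature.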

This perspective also clears up the apparently mysterious mechanics of quantum superposition collapse and the principle of least action.

A superposition is literally just a collection of unactualized, computationally accessible microstate paths a particle/algorithm could travel through, superimposed on top of each other, with time and energy being paid as the actional resource cost for searching through and collapsing all possible iterative states at that step in time into one definitive state. No matter if it’s a detector interaction, observer interaction, or particle collision interaction, same difference. Each possible microstate is separated by exactly one bit flip’s worth of difference in microstate path outcomes, creating a definitive distinction between two near-equivalent states.

The choice of which microstate gets selected is statistical/combinatoric in nature. Each microstate is probability weighted based on its entropy/order. Entropy is a kind of ‘information-theoretic weight’ property that affects the actualization probability of that microstate based on its location in Pascal’s triangle, and it’s directly tied to algorithmic complexity (more complex microstates that create unique meaningful patterns of information are harder to form randomly from scratch than a soupy random cloud state, and thus rarer).

Measurement happens when a superposition of microstates entangles with a detector, causing an actualized bit of distinction within the sensor’s informational state. It’s all about the interaction between representational systems and the collapsing of all possible superposition microstates into an actualized, distinct collection of bits of information.

Planck’s constant is really about the energy cost of actualizing a definitive microstate of information from a quantum superposition at smaller and smaller scales of measurement in space or time. Distinguishing between two bits of information at a scale smaller than the Planck length, or at a time interval smaller than the Planck time, would cost more energy than the universe allows in one area (it would create a kugelblitz, a pure energy black hole, if you tried). So any computational microstate paths that differ by less than a Planck length’s worth of distinction are indistinguishable, blurring together and creating a bedrock limit scale for computational actualization.

But for me, personally, I believe that at some point our own language breaks down because it isn’t quite adapted to dealing with these types of questions, as is again in some sense reminiscent of both Godel and quantum mechanics, if you would allow the stretch. It is undeterminability that is the key, the “event horizon” of knowledge as it were.

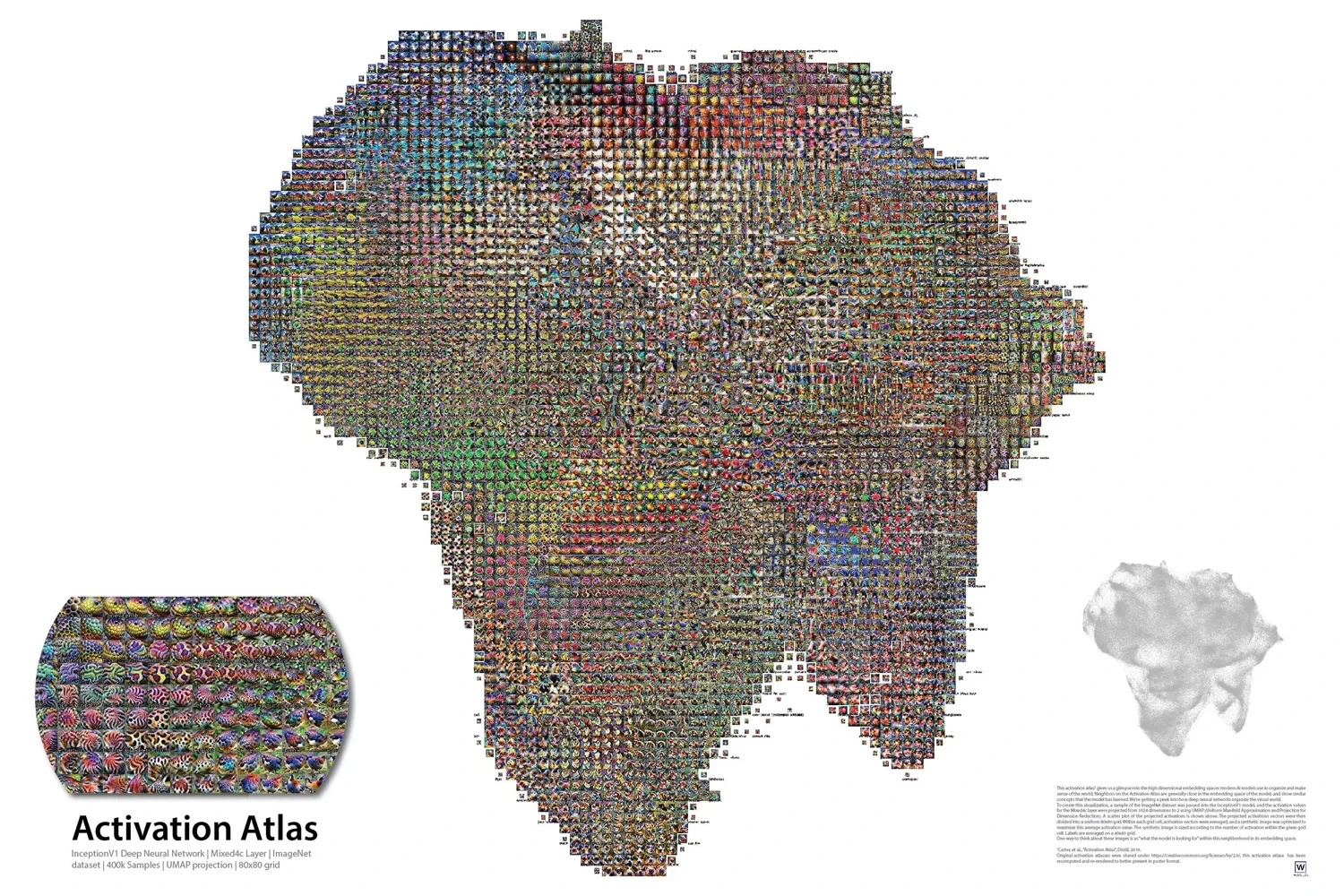

Language is symbolic representation; cognition and conversation are computations as your neural network traces paths through your activation atlas. Our ability to talk about abstractions is tied to how complex they are to abstract about/model in our minds. The cool thing is that language evolves as our understanding does, so we can convey new concepts or perspectives that didn’t exist before.

Thank you for your thoughtful response! I did my best to cook up a good reply, sorry if it’s a bit long.

Your point that we can simply “add new math” to describe new physics is intuitively appealing. However, it rests on a key assumption: that mathematical structures are ontologically separate from physical reality, serving as mere labels we apply to an independent substrate.

This assumption may be flawed. A compelling body of evidence suggests the universe doesn’t just follow mathematical laws, it appears to instantiate them directly. Quantum mechanics isn’t merely “described by” Hilbert spaces; quantum states are vectors in a Hilbert space. Gauge symmetries aren’t just helpful analogies; they are the actual mechanism by which forces operate. Complex numbers aren’t computational tricks; they are necessary for the probability amplitudes that determine outcomes.

If mathematical structures are the very medium in which physics operates, and not just our descriptions of it, then limits on formal mathematics become direct limits on what we can know about physics. The escape hatch of “we’ll just use different math” closes, because all sufficiently powerful formal systems hit the same Gödelian wall.

You suggest that if gravity doesn’t fit the Standard Model, we can find an alternate description. But this misses the deeper issue: symbolic subsystem representation itself has fundamental, inescapable costs. Let’s consider what “adding new math” actually entails:

- Discovery: Finding a new formal structure may require finding the right complex deductive path through proof-making, which is often an expensive, rare, and unpredictable process. If the required concept has no clear path from existing knowledge, it may even require non-algorithmic insight/oracle calls to create new connective paths between knowledge structures.

- Verification: Proving the new system’s internal consistency may itself be an undecidable problem.

- Tractability: Even with the correct equations, they may be computationally unsolvable in practice.

- Cognition: The necessary abstractions may exceed the representational capacity of human brains.

Each layer of abstraction that builds on the last (like going from circles to spheres to manifolds) also carries an exponential cognitive and computational cost. There is no guarantee that a Theory of Everything resides within the representational capacity of human neurons, or even galaxy-sized quantum computers. The problem isn’t just that we haven’t found the right description; it’s that the right description might be fundamentally inaccessible to finite systems like us.

You correctly note that our perception may be flawed, allowing us to perceive only certain truths. But this isn’t something we can patch up with better math; it’s a fundamental feature of being an embedded subsystem. Observation, measurement, and description are all information-processing operations that map a high-dimensional reality onto a lower-dimensional representational substrate. You cannot solve a representational capacity problem by switching representations. It’s like trying to fit an encyclopedia into a tweet by changing the font. It’s the difference between being and representing: the latter will always have serious overhead limitations when trying to model the former.

This brings us to the crux of the misunderstanding about Gödel. His theorem doesn’t claim our theories are wrong or fallacious. It states something more profound: within any sufficiently powerful formal system, there are statements that are true but unprovable within its own axioms.

For physics, this means: even if we discovered the correct unified theory, there would still be true facts about the universe that could not be derived from it. We would need new axioms, creating a new, yet still incomplete, system. This incompleteness isn’t a sign of a broken theory; it’s an intrinsic property of formal knowledge itself.

An even more formidable barrier is computational irreducibility. Some systems cannot be predicted except by simulating them step-by-step. There is no shortcut. If the universe is computationally irreducible in key aspects, then a practical “Theory of Everything” becomes a phantom. The only way to know the outcome would be to run a universe-scale simulation at universe-speed, which is to say, you’ve just rebuilt the universe, not understood it.
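The usual toy example for this is the Rule 30 cellular automaton: the update rule is one line, but no shortcut is known for jumping ahead, so you get row N by computing every row before it. A minimal Python sketch, where the wrap-around edges and the helper names are my own choices:

```python
def rule30_step(cells: list[int]) -> list[int]:
    """Apply one Rule 30 update: new cell = left XOR (center OR right)."""
    n = len(cells)
    return [
        cells[(i - 1) % n] ^ (cells[i] | cells[(i + 1) % n])
        for i in range(n)
    ]


def run(cells: list[int], steps: int) -> list[int]:
    """Advance the automaton one step at a time; there is no jump-ahead."""
    for _ in range(steps):
        cells = rule30_step(cells)
    return cells


def render(cells: list[int]) -> str:
    """Draw a row with '#' for live cells and '.' for dead ones."""
    return "".join("#" if c else "." for c in cells)


seed = [0, 0, 0, 1, 0, 0, 0]  # a single live cell in the middle
```

Calling `run(seed, k)` costs k full passes over the row no matter what. Scale that idea up and you get the “rebuild the universe to predict the universe” problem.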

The optimism about perpetually adding new mathematics relies on several unproven assumptions:

- That every physical phenomenon has a corresponding mathematical structure at a human-accessible level of abstraction.

- That humans will continue to produce the rare, non-algorithmic insights needed to discover them.

- That the computational cost of these structures remains tractable.

- That the resulting framework wouldn’t collapse under its own complexity, ceasing to be “unified” in any meaningful sense.

I am not arguing that a ToE is impossible or that the pursuit is futile. We can, and should, develop better approximations and unify more phenomena. But the dream of a final, complete, and provable set of equations that explains everything, requires no further input, and contains no unprovable truths, runs headlong into a fundamental barrier.

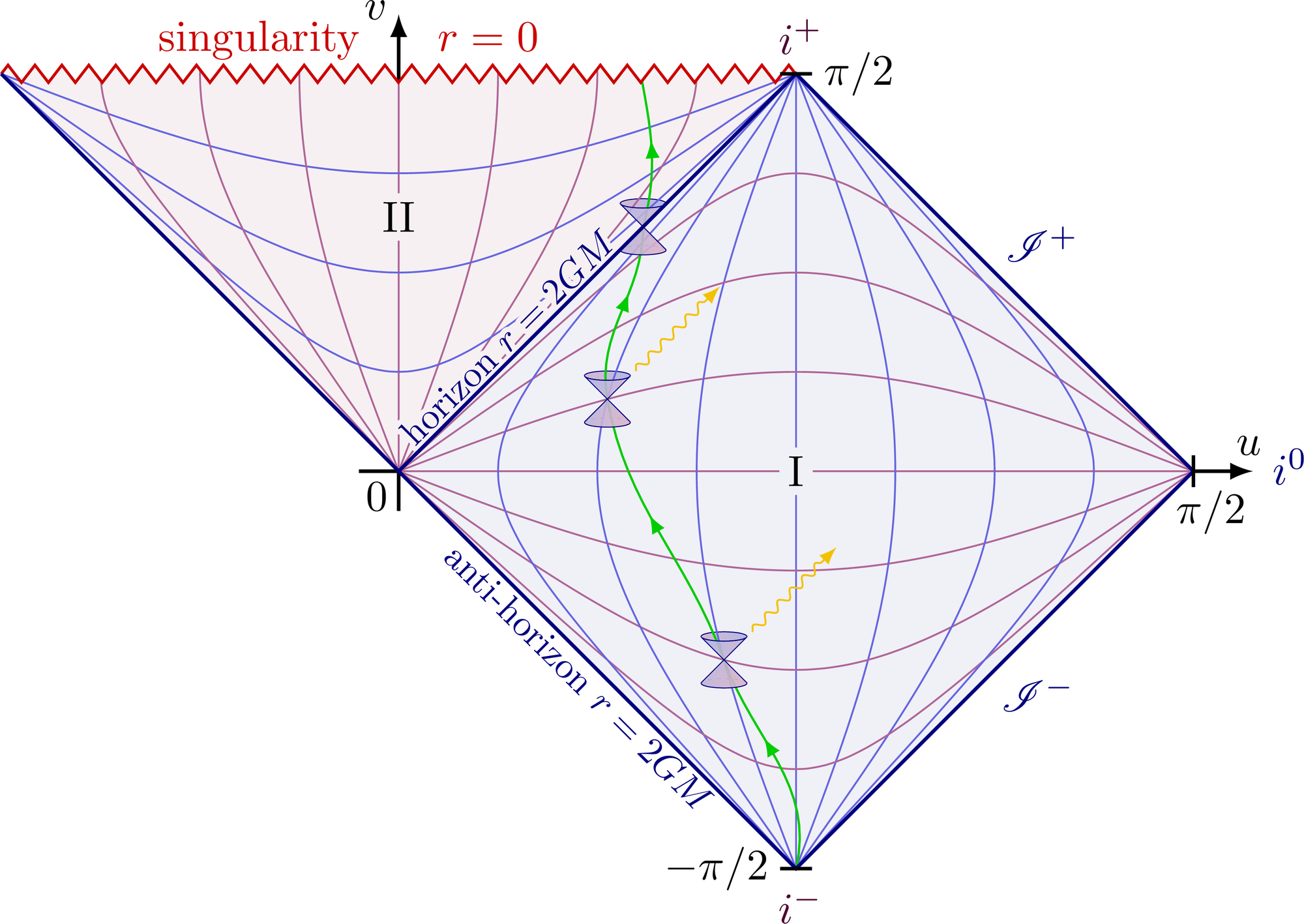

You are close I think! Though it’s not quite that simple IMO. According to Penrose spacetime diagrams, the roles of space and time get reversed in a black hole, which causes all sorts of weirdness from an interior perspective. Just like the universe has no center, it also has no single singularity pulling everything in, unlike a black hole. The universe contains many singularities; a black hole contains one singularity that might connect to many universes, depending on how much you buy into the complete Penrose diagrams.

Now here’s where it gets interesting. Our observable universe has a hard limit boundary known as the cosmological horizon, due to the finite speed of light and the finite lifespan of the universe. It’s impossible to ever know what’s beyond this horizon boundary. Similarly, black hole event horizons share this property of not being able to know about the future state of objects that fall inside. A cosmologist would say they are different phenomena, but from an information-theoretic perspective these are fundamentally indistinguishable Riemann manifolds that share a very unique property.

They are geometric, physically realized instances of uncomputability, which is a direct analog of Gödelian incompleteness and Turing undecidability within the universe’s computational phase space. The universe is a finite computational system with a finite state representation capacity of about 10^122 bits according to the Bekenstein bound and the Planck constant. If an area of spacetime exceeds the number of potential microstates it can represent, it gets quarantined in a black hole singularity so the whole system doesn’t freeze up trying to compute the uncomputable.
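If you want to see where a number like 10^122 comes from, here is a rough Python sketch using the holographic form of the bound: entropy = horizon area / (4 × Planck area), converted from nats to bits. Using the Hubble radius c/H0 as a stand-in for the cosmological horizon, and H0 = 70 km/s/Mpc, are both simplifying assumptions on my part.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J*s
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0       # speed of light, m/s
MPC_IN_M = 3.0857e22    # one megaparsec in metres


def horizon_bits(h0_km_s_mpc: float = 70.0) -> float:
    """Holographic-bound estimate of the bits a Hubble-radius horizon can hold."""
    h0 = h0_km_s_mpc * 1000.0 / MPC_IN_M  # Hubble constant in 1/s
    radius = C / h0                       # Hubble radius, metres
    planck_area = HBAR * G / C**3         # Planck length squared, m^2
    area = 4.0 * math.pi * radius**2      # horizon surface area, m^2
    nats = area / (4.0 * planck_area)     # entropy in natural units
    return nats / math.log(2)             # convert nats to bits


bits = horizon_bits()
```

With those inputs it lands at a few times 10^122 bits. The exact prefactor moves with the assumed H0 and with which horizon you pick, but the order of magnitude holds.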

The problem is that the universe can’t just throw away all that energy and information due to conservation laws. Instead it utilizes something called the ‘holographic principle’ to safely conserve information even if it can’t compute with it. Information isn’t lost when a thing enters a black hole; instead it gets encoded into the topological event horizon boundary itself. In a sense the information is ‘pulled’ into a higher fractal dimension for efficient encoding. Over time the universe slowly works on safely bleeding out all that energy through Hawking radiation.

So say you buy into this logic and assume that the cosmological horizon isn’t just some observational limit artifact but an actual topological Riemann manifold made of the same ‘stuff’, sharing properties with an event horizon, like an inverted black hole where the universe is a kind of anti-singularity that distributes matter everywhere dense as it expands instead of concentrating matter into a single point. What could that mean?

So this holographic principle thing is all about how information in high dimensional topological spaces can be projected down into lower dimensional space. This concept is insanely powerful and is at the forefront of advanced computer modeling of high dimensional spaces. For example, neural networks organize information in high dimensional spaces called activation atlases that have billions and trillions of ‘feature dimensions’ each representing a kind of relation between two unique states of information.

So, what if our physical universe is a lower dimensional holographic projection of the cosmological horizon manifold? What if the unknowable cosmological horizon bubble surrounding our universe is the universe’s fundamental substrate in its native high dimensional space, and our 3D+1T universe perspective is a projection?

Anything in particular you want to know about that only a GUT might provide? Or do you just want to see what it looks like?

Was this on Nauvis? I’ve never seen a meteor fall before.