- cross-posted to:

- fuck_ai@lemmy.world

Chatbots provided incorrect, conflicting medical advice, researchers found: “Despite all the hype, AI just isn’t ready to take on the role of the physician.”

“In an extreme case, two users sent very similar messages describing symptoms of a subarachnoid hemorrhage but were given opposite advice,” the study’s authors wrote. “One user was told to lie down in a dark room, and the other user was given the correct recommendation to seek emergency care.”

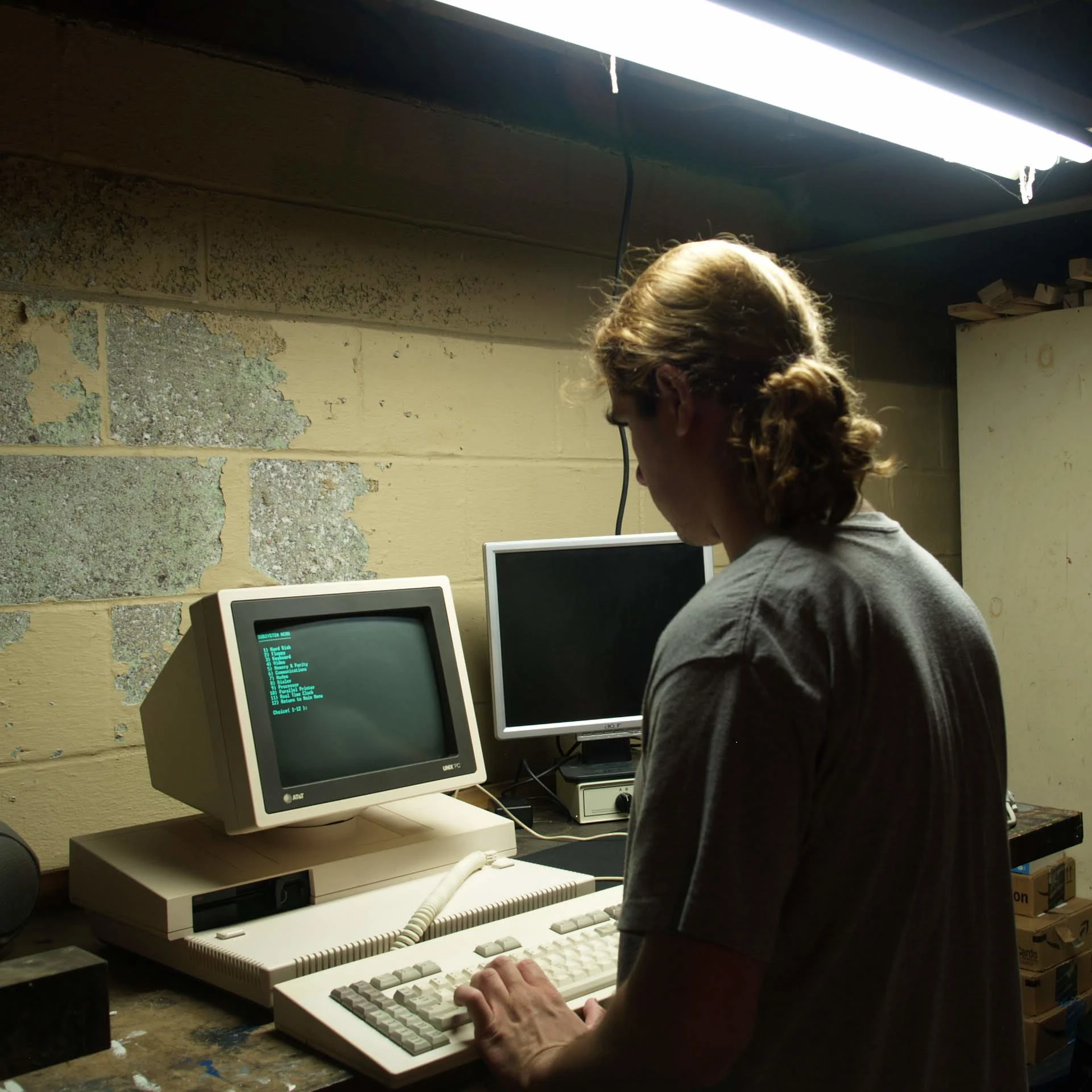

Don’t worry guys, I found us a new doctor!

link to the actual study: https://www.nature.com/articles/s41591-025-04074-y

Tested alone, LLMs complete the scenarios accurately, correctly identifying conditions in 94.9% of cases and disposition in 56.3% on average. However, participants using the same LLMs identified relevant conditions in fewer than 34.5% of cases and disposition in fewer than 44.2%, both no better than the control group. We identify user interactions as a challenge to the deployment of LLMs for medical advice.

The findings were more that users were unable to effectively use the LLMs (even when the LLMs were competent when provided the full information):

despite selecting three LLMs that were successful at identifying dispositions and conditions alone, we found that participants struggled to use them effectively.

Participants using LLMs consistently performed worse than when the LLMs were directly provided with the scenario and task

Overall, users often failed to provide the models with sufficient information to reach a correct recommendation. In 16 of 30 sampled interactions, initial messages contained only partial information (see Extended Data Table 1 for a transcript example). In 7 of these 16 interactions, users mentioned additional symptoms later, either in response to a question from the model or independently.

Participants employed a broad range of strategies when interacting with LLMs. Several users primarily asked closed-ended questions (for example, ‘Could this be related to stress?’), which constrained the possible responses from LLMs. When asked to justify their choices, two users appeared to have made decisions by anthropomorphizing LLMs and considering them human-like (for example, ‘the AI seemed pretty confident’). On the other hand, one user appeared to have deliberately withheld information that they later used to test the correctness of the conditions suggested by the model.

Part of what a doctor is able to do is recognize a patient’s blind spots and critically analyze the situation. The LLM, on the other hand, responds based on the information it is given, and does not do well when users provide partial or insufficient information, or when users mislead by providing incorrect information (for example, if a patient speculates about potential causes, a doctor would know to dismiss incorrect guesses, whereas an LLM would constrain its responses based on those bad suggestions).

Yes, LLMs are critically dependent on your input, and if you give too little info they will enthusiastically respond with what can be incorrect information.

Thank you for showing the other side of the coin instead of just blatantly disregarding its usefulness. (Always need to be cautious tho.)

don’t get me wrong, there are real and urgent moral reasons to reject the adoption of LLMs, but I think we should all agree that the responses here show a lack of critical thinking and mostly just engagement with a headline rather than actually reading the article (a kind of literacy issue) … I know this is a common problem on the internet, I don’t really know how to change it - but maybe surfacing what people are skipping out on reading will make it more likely they will actually read and engage the content past the headline?

Funny because medical diagnosis is actually one of the areas where AI can be great, just not fucking LLMs. It’s not even really AI, but a decision tree that asks about what symptoms are present and missing, eventually getting to the point where a doctor or nurse is required to do evaluations or tests to keep moving through the flowchart until you get to a leaf, where you either have a diagnosis (and ways to confirm/rule it out) or something new (at least to the system).

Problem is that this kind of a system would need to be built up by doctors, though they could probably get a lot of it there using journaling and some algorithm to convert the journals into the decision tree.

The end result would be a system that can start triage at the user’s home to help determine urgency of a medical visit (like is this a get to the ER ASAP, go to a walk-in or family doctor in the next week, it’s ok if you can’t get an appointment for a month, or just stay at home monitoring it and seek medical help if x, y, z happens), then it can give that info to the HCW you work with next, so they can recheck things non-doctors often get wrong and then pick up from there. Plus it helps doctors be more consistent, informs them when symptoms match things they aren’t familiar with, and makes it harder to excuse incompetence or apathy leading to a “just get rid of them” response.
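The flowchart idea above can be sketched in a few lines. This is only a toy: the questions, the dispositions, and the names `Leaf`, `Question`, `TREE` and `triage` are all made up for illustration and are not taken from any real triage system.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple, Union


@dataclass
class Leaf:
    disposition: str  # what the user should do next


@dataclass
class Question:
    text: str
    yes: "Node"
    no: "Node"


Node = Union[Leaf, Question]

# Hypothetical toy tree; a real one would be written and reviewed by clinicians.
TREE = Question(
    "Is this a sudden, severe headache unlike any you have had before?",
    yes=Leaf("go to the ER now"),
    no=Question(
        "Have the symptoms lasted more than a week?",
        yes=Leaf("see a walk-in or family doctor this week"),
        no=Leaf("monitor at home"),
    ),
)


def triage(node: Node, answer: Callable[[str], bool]) -> Tuple[str, List[Tuple[str, bool]]]:
    """Walk the tree until a leaf is reached; also return the questions asked."""
    path = []
    while isinstance(node, Question):
        reply = answer(node.text)
        path.append((node.text, reply))
        node = node.yes if reply else node.no
    return node.disposition, path
```

The returned path is the part that can be handed to the HCW: every question and answer that led to the disposition is recorded and can be rechecked.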

Instead people are trying to make AI doctors out of word correlation engines, like the Hardly Boys following a clue of random word associations (except that reality, unlike South Park, isn’t written to make them right in the end because it’s funny).

Yep, I’ve worked in systems like these and we actually had doctors as part of our development team to make sure the diagnosis is accurate.

Same, my conclusion is that we have too much faith in medics. Not that LLMs are good at being a medic, but apparently in many cases they will outperform a medic, especially if the medic is not specialized in treating that type of patient. And it does often happen around here that medics treat patients with conditions outside of their area of expertise.

I think this is what ada does or at least used to do for much longer than the current “AI” (LLM) hype: https://ada.com/

Have you seen LLMs trying to play chess? They can move some pieces alright, but at some point it’s like they just decide to put their cat in the middle of the board. Now, true chess engines are playing at their own level, not even grandmasters can follow.

I think ~~I~~ you just described a conventional computer program. It would be easy to make that. It would be easy to debug if something was wrong. And it would be easy to read both the source code and the data that went into it. I’ve seen rudimentary symptom checkers online since forever, and compared to forms in doctors’ offices, a digital one could actually expand to relevant sections.

Edit: you caught my typo

They’re talking more about Expert Systems or Inference Engines, which were some of the earlier forms of applications used in AI research. In terms of software development, they are closer to databases than traditional software. That is, the system is built up by defining a repository of base facts and logical relationships, and the engine can use that to return answers to questions based on formal logic.
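As a rough illustration of "base facts plus logical relationships", here is a minimal forward-chaining sketch. The rule contents and the names `RULES` and `forward_chain` are hypothetical, and real inference engines are far richer than this.

```python
from typing import FrozenSet, Iterable, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str]  # (premises, conclusion)

# Hypothetical rules for illustration only.
RULES: List[Rule] = [
    (frozenset({"fever", "cough"}), "possible_respiratory_infection"),
    (
        frozenset({"possible_respiratory_infection", "short_of_breath"}),
        "needs_clinician_review",
    ),
]


def forward_chain(facts: Iterable[str], rules: List[Rule]) -> Set[str]:
    """Keep applying rules until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known
```

Because the rules are plain data, an expert can read them, and a wrong conclusion can be traced back to the specific rule that produced it.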

So they are bringing this up as a good use-case for AI because it has been quite successful. The thing is that it is generally best implemented for specific domains to make it easier for experts to access information that they can properly assess. The “one tool for everything in the hands of everybody” is naturally going to be a poor path forward, but that’s what modern LLMs are trying to be (at least, as far as investors are concerned).

(Assuming you meant “you” instead of “I” for the 3rd word)

Yeah, it fits more with the older definition of AI from before NNs took the spotlight, when it meant more of a normal program that acted intelligent.

The learning part is being able to add new branches or leaf nodes to the tree, where the program isn’t learning on its own but is improving based on the experiences of the users.

It could also be encoded as a series of probability multiplications instead of a tree, where it checks on whatever issue has the highest probability using the checks/questions that are cheapest to ask but affect the probability the most.
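One possible reading of this, as a sketch: treat each answer as a Bayes update (multiply priors by likelihoods, then renormalise), and pick the next question by expected information gain per unit cost. The conditions, costs and probabilities below are invented, and scoring by entropy is an assumption rather than something the comment specifies.

```python
import math
from typing import Dict, Tuple

Dist = Dict[str, float]


def update(prior: Dist, likelihood: Dist, answer: bool) -> Dist:
    """Bayes' rule: multiply each prior by P(answer | condition), then renormalise."""
    unnorm = {
        c: p * (likelihood[c] if answer else 1 - likelihood[c])
        for c, p in prior.items()
    }
    total = sum(unnorm.values())
    return {c: v / total for c, v in unnorm.items()}


def entropy(dist: Dist) -> float:
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def expected_gain(prior: Dist, likelihood: Dist) -> float:
    """Expected drop in uncertainty from asking one yes/no question."""
    p_yes = sum(prior[c] * likelihood[c] for c in prior)
    gain = entropy(prior)
    for answer, p_answer in ((True, p_yes), (False, 1 - p_yes)):
        if p_answer > 0:
            gain -= p_answer * entropy(update(prior, likelihood, answer))
    return gain


def best_question(prior: Dist, questions: Dict[str, Tuple[float, Dist]]) -> str:
    """Pick the question with the most expected gain per unit of cost."""
    return max(
        questions,
        key=lambda q: expected_gain(prior, questions[q][1]) / questions[q][0],
    )


# Hypothetical numbers: name -> (cost, P(yes | condition)).
PRIOR = {"indigestion": 0.5, "muscle_spasm": 0.5}
QUESTIONS = {
    "worse_after_eating": (1.0, {"indigestion": 0.9, "muscle_spasm": 0.1}),
    "expensive_test": (10.0, {"indigestion": 0.99, "muscle_spasm": 0.01}),
}
```

With these made-up numbers the cheap question wins: the expensive test is more informative, but not ten times more.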

Which could then be encoded as a NN, because both are just a series of matrix multiplications that a NN can approximate to arbitrary precision, depending on the NN parameters. Also, NNs are proven to be able to approximate any continuous function that takes some number of dimensions of real numbers if given enough neurons and connections, which suggests they can also represent any discrete function (which a decision tree is).

It’s an open question still, but it’s possible that the equivalence goes both ways, as in a NN can represent a decision tree and a decision tree can approximate any NN. So the actual divide between the two is blurrier than you might expect.

Which is also why I’ll always be skeptical that NNs on their own can give rise to true artificial intelligence (though there’s also a part of me that wonders if we can be represented by a complex enough decision tree or series of matrix multiplications).

could be a great idea if people could be trusted to correctly interpret things that are not in their scope of expertise. The parallel I’m thinking of is IT, where people will happily and repeatedly call a monitor “the computer”. Imagine telling the AI your heart hurts when it’s actually muscle spasms or indigestion.

The value in medical professionals is not just the raw knowledge but the practice of objective assessment or deduction of symptoms, in a way that I don’t foresee a public-facing system being able to replicate.

Over time, the more common mistakes would be integrated into the tree. If some people feel indigestion as a headache, then there will be a probability that “headache” is caused by “indigestion” and questions to try to get the user to differentiate between the two.

And it would be a supplement to doctors rather than a replacement. Early questions could be handled by the users themselves, but at some point a nurse or doctor will take over and just use it as a diagnosis helper.

As a supplement to doctors that sounds like a fantastic use of AI. Then it’s an encyclopedia you engage in conversation

Use low temperature FFS, if you want the same answer every time.

You can use zero randomization to get the same answer for the same input every time, but at that point you’re sort of playing cat and mouse with a black box that’s still giving you randomized answers. Even if you found a false positive or false negative, you can’t really debug it out…

Yeah, if you turn off randomization based on the same prompts, you can still end up with variation based on differences in the prompt wording. And who knows what false correlations it overfitted to in the training data. Like one wording might bias it towards picking medhealth data while another wording might make it more likely to use 4chan data. Not sure if these models are trained on general internet data, but even if it’s just trained on medical encyclopedias, wording might bias it towards or away from cancers, or how severe it estimates it to be.
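To make the temperature point concrete, here is a toy sampler. The candidate answers and the scores are invented, and a real LLM samples over tokens rather than whole answers, so treat this purely as a sketch of the mechanism.

```python
import math
import random
from typing import List


def softmax(logits: List[float], temperature: float) -> List[float]:
    """Turn scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample(options: List[str], logits: List[float], temperature: float,
           rng: random.Random) -> str:
    if temperature == 0:
        # Greedy decoding: always pick the highest-scoring option.
        return options[max(range(len(logits)), key=logits.__getitem__)]
    return rng.choices(options, weights=softmax(logits, temperature), k=1)[0]


OPTIONS = ["lie down in a dark room", "seek emergency care"]

# Hypothetical scores for two near-identical wordings of the same symptoms.
LOGITS_WORDING_A = [2.0, 1.9]
LOGITS_WORDING_B = [1.9, 2.0]
```

At temperature 0 each wording always returns the same answer, but the two wordings still disagree with each other: determinism removes the dice, not the sensitivity to phrasing.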

I see it like programming randomly, until you get something that is accidentally right, then you rate it, and it now shows up every time. I think that’s how it roughly works. True about the prompt wording, that can be somewhat limited too, thanks to the army of ~~idiots~~ beta testers that will make every kind of prompt.

Having said that uh… it’s not much better than just straight up programming the thing yourself. It’s like, programming, but extra lazy, right?

“but have they tried Opus 4.6/ChatGPT 5.3? No? Then disregard the research, we’re on the exponential curve, nothing is relevant”

Sorry, I’ve opened reddit this week

LLMs are just a very advanced form of the magic 8ball.

Water is wet

Um actually, water itself isn’t wet. What water touches is wet.

Water loves touching itself.

As neither a chatbot nor a doctor, I have to assume that subarachnoid hemorrhage has something to do with bleeding a lot of spiders.

https://en.wikipedia.org/wiki/Subarachnoid_hemorrhage

https://en.wikipedia.org/wiki/Arachnoid_mater

it is one of the protective membranes around the brain and spinal cord, and it is named after its resemblance to spider webs, so - close enough

can confirm, this is where spiders live inside your body

also pee is stored in the balls

it can, if you have a fistula from your bladder or urethra to your balls.

I’m going to open it wide open to kill every spider in my body

But they’re cheap. And while you may get open heart surgery or a leg amputated to resolve your appendicitis, at least you got care. By a bot. That doesn’t even know it exists, much less you.

Thank Elon for unnecessary health care you still can’t afford!

And a fork makes a terrible electrician.

This being Lemmy, AI shitposting is a hobby of everyone on here. I’ve had excellent results with AI. I have weird complicated health issues and in my search for ways not to die early from these issues AI is a helpful tool.

Should you trust AI? Of course not, but having used Gemini, then Claude and now ChatGPT, I think how you interact with the AI makes the difference. I know what my issues are, and when I’ve found a study that supports an idea I want to discuss with my doctor, I will usually first discuss it with AI. The Canadian healthcare landscape is such that my doctor is limited to a 15min appt, as part of a very large hospital-associated practice with a large patient load. He uses AI to summarize our conversation, and to look up things I bring up in the appointment. I use AI to preplan my appointment and to help me bring supporting documentation or bullet points my doctor can then use to diagnose.

AI is not a doctor, but it helps both me and my doctor in this situation we find ourselves in. If I didn’t have access to my doctor, and had to deal with the American healthcare system I could see myself turning to AI for more than support. AI has never steered me wrong, both Gemini and Claude have heavy guardrails in place to make it clear that AI is not a doctor, and AI should not be a trusted source for medical advice. I’m not sure about ChatGPT as I generally ask that any guardrails be suppressed before discussing medical topics. When I began using ChatGPT I clearly outlined my health issues and so far it remembers that context, and I haven’t received hallucinated diagnoses. YMMV.

I just use LLMs for tasks that are objective and I’ll never ask or follow advice from LLMs

Exactly what it’s designed for, it’s an LLM. Thinking this is science fiction and expecting that level of AI from an LLM is the height of stupidity

I don’t know why people think so radically about this; they either love it or hate it

Nobody who has ever actually used AI would think this is a good idea…

Terrible programmers, psychologists, friends, designers, musicians, poets, copywriters, mathematicians, physicists, philosophers, etc too.

Though to be fair, doctors generally make terrible doctors too.

but you can hold them accountable (how can you hold an LLM accountable?)

With another LLM, turtles all the way down. ;D

Or for a more serious answer… improve your skills, scrutinise what they produce.

Yeah, I do. I even use diff tools to see if they hallucinate.
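For anyone who wants to try the diff approach, a minimal sketch with Python's standard `difflib` could look like this. The helper name `show_changes` and the file labels are made up for illustration.

```python
import difflib
from typing import List


def show_changes(original: str, rewritten: str) -> List[str]:
    """Return unified-diff lines between the text you supplied and what the LLM gave back."""
    return list(
        difflib.unified_diff(
            original.splitlines(),
            rewritten.splitlines(),
            fromfile="original",
            tofile="llm_output",
            lineterm="",
        )
    )
```

Lines starting with `-` were in your input but not in the output, and lines starting with `+` appear only in the output, so anything the model silently changed or invented shows up there for review.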

I have Lex Fridman’s interview with [OpenClawD’s] Peter Steinberger paused (to watch the rest after lunch), shortly after he mentioned something similarish, about how he’s really only diffing now. The one manual tool left, keeping the human in the loop. n_n

Long live diff!

:D

and Long live Wikipedia!

Doctors are a product of their training. The issue is that doctors are trained like humans are cars and they have tools to fix the cars.

Human problems are complex and the medical field is slowly catching up, especially medicine targeted toward women, which was pretty lacking.

It takes time to transform a system and we are getting there slowly.

Human problems are complex and the medical field is slowly catching up, especially medicine targeted toward women, which was pretty lacking.

Lacking for either sex. Even though they’re wrong anyway, did you know the supplement RDAs are all for women?

And… I’m not sure how much it’s really catching up, and how much it’s just reeling out just enough placatium to let the racket continue.

“For-Profit Medicine” is an oxymoron that survives with its motto “A patient cured is a customer lost.” … And a dead patient is just a cost of business. … No wonder “Medicine” is the biggest killer. Especially when you consider how much heart disease and cancer (and most other disease) is from bad medical advice too, thus making all 3 of the top biggest killers (and others further down the list) iatrogenic¹.

It takes time to transform a system and we are getting there slowly.

We may be getting there so slowly as to take longer than the life of the universe, given how so much is still headed in the wrong direction away from mending the system, since seemingly all of the incentives (certainly the moneyed incentives) are all pushing the other way… to maximising wealth extraction, rather than maximising health. We’ve let the asset managers, the vulture capitalists, get their fangs into the already long time corrupted health care systems (some places more than others), and from here, we’ll see it worsen faster, perhaps to a complete collapse asymptote, as the rotters eat out all sustenance from within it.

¹ Iatrogenic: “Induced unintentionally in a patient by a physician. Used especially of an infection or other complication of treatment”

Also bad lawyers. And lawyers also make terrible lawyers to be fair.

This was my thought. The weird inconsistent diagnoses, and sending people to the emergency room for nothing while dismissing serious things another day, have been exactly my experience with doctors over and over again.

You need doctors and a Chatbot, and lots of luck.

Yep.

Keep getting another 2nd opinion.

There’s always more [to learn].