You just fucking wait. Trump is bringing manufacturing to the US. And when that plant opens someday you’ll be so sorry you doubted.

I’m sure the Foxconn plant in Wisconsin will fire up ANY DAY NOW! *drums fingers*

I talked to like 50 people today and all of the people said they were starting manufacturing plants tomorrow and they’ll be fully functional Tuesday around 3:15.

I started mine earlier and I’ve already done manufacturing 3 times today. It’s really easy. By this time tomorrow I’ll have a couple more and they’ll all be winning manufacturing.

Tariffs gave me the ability to finally believe in myself. Tariffs have increased my stamina in bed, given me a full head of hair again, and since I started my manufacturing plant yesterday I’ve dropped 50 pounds.

What is this?

I don’t remember the scene, but it looks like memory from an android. Not sparkly enough for Star Trek; maybe Terminator 2?

Not possible.

Why? If they looked at how current tech works, they could easily develop the same tech 10,000x faster.

How?

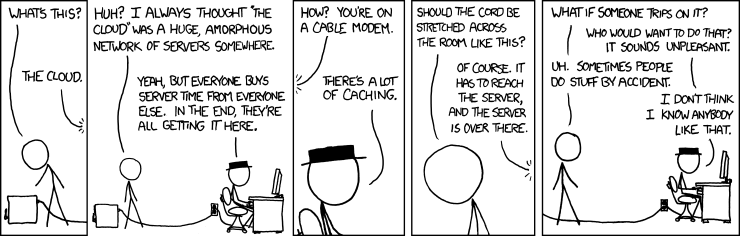

replace “the joke” with “irony” and then send the image to yourself

Holy shit I think with the joke, irony, and the two of you, I might be able to put some sort of perpetual motion machine together! Now I just need some investors…

Most Don’t Know This

and the circle will be complete

Easy, instead of developing the technology themselves they just copy it and claim they developed it 10000 times faster.

It’s a play on the original title.

No? Oh, that’s a shame. I was hoping for some improvement in the world, but a random person on the internet said it wasn’t possible without giving any reasons at all. Oh well.

No it’s literally impossible without bypassing the speed of light and/or the size of atoms.

This sounds like that material would be more useful in high-performance radars than in flash memory.

It’s likely BS anyway. Maybe it’s just me, but reading about another crazy breakthrough from China every single day during this trade war smells fishy, because I’ve seen the exact same propaganda strategy during the pandemic, when relations between China and the rest of the world weren’t exactly the best. A lot of the headlines coming from there are just claims about flashy topics with very little substance or scrutiny. And the outlets publishing the stories aren’t exactly the most renowned either.

It’s definitely possible they’re amplifying these developments to maintain confidence in the Chinese market, but I doubt they’re outright lying about the discoveries. I think it’s also likely that some of what they’ve been talking about has been in development for a while and that China is choosing now to make big reveals about them.

Whenever they claim something is “X times” better, I doubt it right away, because they always interpret the statistics in the dumbest way possible. Say you have a solar panel that is 28% efficient. There is no way it can be 20x as efficient, since that would mean 560% efficiency; that’s just clickbait.

trustworthiness = 1/(claimed improvement)

Yeah… At best clickbaity as fuck, at worst a complete scam.

Any time there is a 10x or more in a headline you are 10x or more likely to be right by calling it BS.

It’s like Temu. 100x discount.

Is that fast enough to put an LLM in swap and have decent performance?

Note that this, in theory, speaks to the performance of a non-volatile memory. It does not speak to cost.

We already have a faster-than-NAND non-volatile storage in phase-change memory. It failed due to expense.

If this thing is significantly more expensive than even RAM, it may fail even if it is everything it claims to be. If it is at least as cheap as RAM, it’ll be huge, since it is faster than RAM and non-volatile.

Whether something gets used for swap is dictated by cost, not by non-volatility.

Well, I’ll never see it, unless TI or another American company designs their own version.

Too bad the US can’t import any of it.

“These chips are 10,000 times faster, therefore we will increase our tariffs to 10,100%!”

That was yesterday. It doubled since then IIRC

Wow, finally graphene has been cracked. Exciting times for portable low-energy computing

By tuning the “Gaussian length” of the channel, the team achieved two‑dimensional super‑injection, which is an effectively limitless charge surge into the storage layer that bypasses the classical injection bottleneck.

They’re just copying the description of the turbo encabulator.

They finally stole the French édriseur technology I see

Which episode of Star Trek is this from?

The one where there’s a problem with the holodeck.

Do you have any idea how little that narrows it down?

It’s the one where Barclay gets obsessed with his Holodeck program.

I don’t think so. I just rewatched it. It’s the one where Data finds out something to make himself more human. Picard tells him something profound and moving.

I think I saw that one. It’s the one where Riker sits down on a chair like he’s mounting a small horse.

Maybe it’s the episode where Picard does this:

Clickbait article with some half-truths. A discovery was made, but it has little to do with AI, real-world applications will be much, MUCH more limited than what’s being talked about here, and they will also likely still take years to come out.

The key word is China, let’s not kid ourselves. Otherwise it would be just another pop-sci clickbait piece, but now it can be ammunition in the fight against the degenerate imperialist West or some BS like that.

Heh?

AI AI AI AI

Yawn

Wake me up if they figure out how to make this cheap enough to put in a normal person’s server.

You can get a Coral TPU for 40 bucks or so.

You can get an AMD APU with an NN-inference-optimized tile for under $200.

Training can be done with any relatively modern GPU, with varying efficiency and capacity depending on how much you want to spend.

What price point are you trying to hit?

With regard to AI? None, tbh.

With this super fast storage I have other cool ideas but I don’t think I can get enough bandwidth to saturate it.

> With regard to AI? None, tbh.

TBH, that might be enough. Stuff like SDXL runs on 4 GB cards (the trick is using ComfyUI; expect something like 5-10 s/it), and smaller LLMs reportedly do too (haven’t tried, not interested). And the reason I’m eyeing a 9070 XT isn’t AI, it’s finally upgrading my GPU; it would still be a massive fucking boost for AI workloads.

You’re willing to pay $none to have hardware ML support for local training and inference?

Well, I’ll just say that you’re gonna get what you pay for.

No, I think they’re saying they’re not interested in ML/AI. They want this super fast memory available for regular servers for other use cases.

Precisely.

I have a hard time believing anybody wants AI. I mean, AI as it is being sold to them right now.

I mean, the image generators can be cool, and LLMs are great for bouncing ideas off of at 4 AM when everyone else is asleep. But I can’t imagine paying for AI, I don’t want it integrated into most products, and I don’t want to put a lot of effort into hosting a low-parameter model that performs way worse than the free tier of ChatGPT. So you’re exactly right: it’s not being sold to me in a way that would make me want to pay for it or invest in hardware to host better models.

I just use pre-made AIs, write some detailed instructions for them, and then watch them churn out basic documents over hours… I need a better laptop.

“Normal person” is a modifier of server. It does not state any expectation of every normal person having a server. Instead, it sets expectation that they are talking about servers owned by normal people. I have a server. I am norm… crap.

You… you don’t? Surely there’s some mistake, have you checked down the back of your cupboard? Sometimes they fall down there. Where else do you keep your internet?

Apologies, I’m tired and that made more sense in my head.

Yeah, when you’re a technology enthusiast, it’s easy to forget that your average user doesn’t have a home server - perhaps they just have a NAS or two.

(Kidding aside, I wish more people had NAS boxes. It’s pretty disheartening to help someone find old media and they show a giant box of USB sticks and hard drives. On a good day. I do have a USB floppy drive and a DVD drive just in case.)

Hello, fellow home labber! I have a home-built Xpenology box, a Proxmox server with a dozen VMs, a Hackintosh, and a 44-core workstation running Linux. Oh, and a USB floppy drive. We are out here.

I also like long walks in Oblivion.

Man, Oblivion walks are the best until a crazy woman comes at you trying to steal your soul with a fancy sword.

> It’s pretty disheartening to help someone find old media and they show a giant box of USB sticks and hard drives.

Equally disheartening is knowing that both of those have a shelf-life. Old USB flash drives are more durable than the TLC/QLC cells we use today, but 15 years sitting unpowered in a box doesn’t have very good prospects.

lol yeah, the Lemmy userbase is NOT an accurate sample of the technical aptitude of the general population 😂