And this is where I split with Lemmy.

There’s a very fragile, fleeting war between shitty, tech bro hyped (but bankrolled) corporate AI and locally runnable, openly licensed, practical tool models without nearly as much funding. Guess which one doesn’t care about breaking the law because everything is proprietary?

The “I don’t care how ethical you claim to be, fuck off” attitude is going to get us stuck with the former. It’s the same argument as Lemmy vs Reddit, compared to a “fuck anything like reddit, just stop using it” attitude.

What if it was just some modder trying a niche model/finetune to restore an old game, for free?

That’s a rhetorical question, as I’ve been there: a few years ago, I used ESRGAN finetunes to help restore a game and (separately) a TV series. Used some open databases for data. Community loved it. I suggested an update in that same community (who apparently had no idea their beloved “remaster” involved old-school “AI”), and got banned for the mere suggestion.

So yeah, I understand AI hate, oh do I. Keep shitting on Altman and AI bros. But anyone (like this guy) who wants to bury open-weights AI: you are digging your own graves.

What if it was just some modder trying a niche model/finetune to restore an old game, for free?

Yeah? Well what if they got very similar results with traditional image processing filters? Still unethical?

The effect isn’t the important part.

If I smash a thousand orphan skulls against a house and wet it, it’ll have the same effect as a decent limewash. But people might have a problem with the sourcing of the orphan skulls.

It doesn’t matter if you’we just a wittle guwy that collects the dust from the big corporate orphan skull crusher and just add a few skulls of your own, or you are the big corporate skull crusher. Both are bad people despite producing the same result as a painter that sources normal limewash made out of limestone.

Even if all involved data is explicitly public domain?

What if it’s not public data at all? Like artificial collections of pixels used to train some early upscaling models?

That’s what I was getting at: some upscaling models are really old, used in standard production tools under the hood, and completely legally licensed. Where do you draw the line between ‘bad’ and ‘good’ AI?

Also I don’t get the analogy. I’m contributing nothing to big, enshittified models by doing hobbyist work; if anything, it poisons them by making public data “inbred” if they want to crawl whatever gets posted.

Even if the data is “ethically sourced,” the energy consumption is still fucked.

The energy consumption of a single AI exchange is roughly on par with a single Google search back in 2009. Source. Was using Google search in 2009 unethical?

Total nonsense. ESRGAN was trained on potatoes, and tons of research models are. I finetune models on my desktop for nickels of electricity; it never touches a cloud datacenter.

At the high end, if you look past bullshitters like Altman, models are dirt cheap to run and getting cheaper. If BitNet takes off (and a 2B model was just released days ago), inference energy consumption will be basically free and on-device, like video encoding/decoding is now.

Again, I emphasize, it’s corporate bullshit giving everything a bad name.

Depends on what you’re producing; running Llama 3.1 locally on a Raspberry Pi doesn’t have any meaningful impact on the climate.

Dude. Read the room. You’re literally in a community called “Fuck AI” and arguing for AI. Are you masochistic or just plain dumb?

I’m trying to make the distinction between local models and corporate AI.

I think what people really hate is enshittification. They hate the shitty capitalism of unethical, inefficient, crappy, hype and buzzword-laden AI that’s shoved down everyone’s throats. They hate how giant companies are stealing from everyone with no repercussions to prop up their toxic systems, and I do too. It doesn’t have to be that way, but it will be if the “fuck AI” attitude like the one on that website is the prevalent one.

So it’s masochism. Got it. Hey, I’m not kink-shaming. I don’t get it, but you be you.

Just make a user interface for your game bro. No need to bring AI into it

Oh, so you deserve to use other people’s data for free, but Musk doesn’t? Fuck off with that one, buddy.

Using open datasets means using data people have made available publicly, for free, for any purpose. So using an AI based on that seems considerably more ethical.

Except gen AI didn’t exist when those people decided on their license. And besides which, it’s very difficult to specify “free to use, except in ways that undermine free access” in a license.

How does a model that is trained on an open dataset undermine free access? The dataset is still accessible, no?

This specifically talks about AI data scrapers being an issue, and some general issues that are frankly not exclusive to open access info.

Exploitative companies are always a problem, whether it’s AI or not. But someone who uses the Wikipedia text torrents as a dataset isn’t doing anything of what is described in that article for example.

The responsibility is on the copyright holder to use a license they actually understand.

If you license your work with, say, the BSD 0 Clause, you are very explicitly giving away your right to dictate how other people use your work. Don’t be angry if people do so in ways you don’t like.

To be fair, he did say he “used some open databases for data”

Musk does too, if it’s openly licensed.

Big difference is:

- X’s data crawlers don’t give a shit because all their work is closed source. And they have lawyers to just smash anyone that complains.

- X intends to resell and make money off others’ work. My intent is free, transformative work I don’t make a penny off of, which is legally protected.

That’s another thing that worries me. All this is heading in a direction that will outlaw stuff like fanfics, game mods, fan art, anything “transformative” of an original work and used noncommercially, as pretty much any digital tool can be classified as “AI” in court.

deleted by creator

Bit of a tangent from the post, but you raise a valid point. Copying is not theft; I suppose piracy is a better term? Which, on an individual level, I am fully in support of, as are many authors, artists and content creators.

However, I think the differentiation occurs when pirated works are then directly profited off of; at least, that’s where I personally draw the distinction. Others may have their own red lines.

E.g. stealing a copy of a textbook because you can’t otherwise afford it is fine by me, but selling bootleg copies, or answer keys directly based off it, wouldn’t be OK.

If your entire work is derived from other people’s works then I’m not okay with that. There’s a difference between influence and just plain reproduction. I also think the way we look at piracy from the consumer side and stealing from the creative side should be different. Downloading a port of a Sega Dreamcast game is not the same as taking someone else’s work and slapping your name on it.

ITT: People who didn’t check the community name

I mean, it does show up on the feed as normal, and sometimes people feel like it’s fine to give a differing perspective to such communities.

To be fair, I thought I blocked this community…

Sure… And you just had to reply with that info.

Oh boy here we go downvotes again

Regardless of the model you’re using, the tech itself was developed and fine-tuned on stolen artwork with the sole purpose of replacing the artists who made it.

That’s not how that works. You can train a model on licensed or open data, and they didn’t make it to spite you. Even if a large group of grifters are out to spite you, those aren’t the ones developing it.

If you’re going to hate something at least base it on reality and try to avoid being so black-and-white about it.

I think his argument is that the models initially needed lots of data to verify and validate their current operation. Subsequent advances may have allowed those models to be created cleanly, but those advances relied on tainted data, thus making the advances themselves tainted.

I’m not sure I agree with that argument. It’s like saying that if you invented a cure for cancer that relied on morally bankrupt means you shouldn’t use that cure. I’d say that there should be a legal process involved against the person who did the illegal acts but once you have discovered something it stands on its own two feet. Perhaps there should be some kind of reparations however given to the people who were abused in that process.

I think his argument is that the models initially needed lots of data to verify and validate their current operation. Subsequent advances may have allowed those models to be created cleanly, but those advances relied on tainted data, thus making the advances themselves tainted.

It’s not true; you can just train a model from the ground up on properly licensed or open data, you don’t have to inherit anything. What you’re talking about is called finetuning which is where you “re-train” a model to do something specific because it’s much cheaper than training from the ground up.

I don’t think that’s what they are saying. It’s not that you can’t now, it’s that initially people did need to use a lot of data. Then they found tricks to improve training on less, but these tricks came about after people saw what was possible. Since they initially needed such data, their argument goes, and we wouldn’t have been able to improve upon the techniques if we didn’t know that huge neural nets trained on lots of data were effective, subsequent models are tainted by the original sin of requiring all this data.

As I said above, I don’t think that subsequent models are necessarily tainted, but I find it hard to argue with the fact that the original models did use data they shouldn’t have and that without it we wouldn’t be where we are today. Which seems unfair to the uncompensated humans who produced the data set.

I actually think it’s very interesting how nobody in this community seems to know or understand how these models work, or even vaguely follow the open source development of them. The first models didn’t have this problem, it was when OpenAI realized there was money to be made that they started scraping the internet and training illegally and consequently a billion other startups did the same because that’s how silicon valley operates.

This is not an issue of AI being bad, it’s an issue of capitalist incentive structures.

Cool! What’s the effective difference for my life that your insistence on nuance has brought? What’s the difference between a world where no one should have ai because the entirety of the tech is tainted with abuse and a world where no one should have ai because the entirety of the publicly available tech is tainted with abuse? What should I, a consumer, do? Don’t say 1000 hrs of research on every fucking jpg, you know that’s not the true answer just from a logistical standpoint

You CAN train a model on licensed or open data. But we all know they didn’t keep it to just that.

Yeah the corporations didn’t, that doesn’t mean you can’t and that people aren’t doing that.

… but that’s why people are against it.

Is everyone posting ghibli-style memes using ethical, licensed or open data models?

No, they’re using a corporate model that was trained unethically. I don’t see what your point is, though. That’s not inherent to how LLMs or other AIs work, that’s just corporations being leeches. In other words, business as usual in capitalist society.

You’re right about it not being inherent to the tech, and I sincerely apologize if I insist too much despite that. This will be my last reply to you. I hope I gave you something constructive to think about rather than just noise.

The issue, and my point, is that you’re defending a technicality that doesn’t matter in real world usage. Nearly no one uses non-corporate, ethical AI. Most organizations working with it aren’t starting from scratch because it’s disadvantageous or outright unfeasible resource-wise. Instead, they use pre-existing corporate models.

Edd may not be technically right, but he is practically right. The people he’s referring to are extremely unlikely to be using or creating completely ethical datasets/AI.

The issue, and my point, is that you’re defending a technicality that doesn’t matter in real world usage.

You’re right and I need to stop doing it. That’s a good reminder to go and enjoy the fresh spring air 😄

Name one that is “ethically” sourced.

And “open data” is a funny thing to say. Why is it open? Could it be open because the people who made it didn’t expect it to be abused for AI? When a pornstar posted a nude picture online in 2010, do you think they imagined that someone would use it to create deepfakes of random women? Please be honest. And yes, a picture might not actually be “open data”, but it highlights the flaw in your reasoning. People don’t think about what could be done to their stuff in the future as much as they should, but they certainly can’t predict the future.

Now ask yourself that same question with any profession. Please be honest and tell us, is that “open data” not just another way to abuse the good intentions of others?

Brother if someone made their nudes public domain then that’s on them

Wow, nevermind, this is way worse than your other comment. Victim blaming and equating the law to morality, name a more popular duo with AI bros.

I can’t make you understand more than you’re willing to understand. Works in the public domain are forfeited for eternity, you don’t get to come back in 10 years and go ‘well actually I take it back’. That’s not how licensing works. That’s not victim blaming, that’s telling you not to license your nudes in such a manner that people can use them freely.

The vast majority of people don’t think in legal terms, and it’s always possible for something to be both legal and immoral. See: slavery, the actions of the third reich, killing or bankrupting people by denying them health insurance… and so on.

There are teenagers, even children, who posted works which have been absorbed into AI training without their awareness or consent. Are literal children to blame for not understanding laws that companies would later abuse when they just wanted to share and participate in a community?

And AI companies aren’t using merely licensed material, they’re using everything they can get their hands on. If they’re pirating you bet your ass they’ll use your nudes if they find them, public domain or not. Revenge porn posted by an ex? Straight into the data.

So your argument is:

- It’s legal

But:

- What’s legal isn’t necessarily right

- You’re blaming children before companies

- AI makers actually use illegal methods, too

It’s closer to victim blaming than you think.

The law isn’t a reliable compass for what is or isn’t right. When the law is wrong, it should be changed. IP law is infamously broken in how it advantages and gets (ab)used by companies. For a few popular examples, see how YouTube mediates between companies and creators, Nintendo suing everyone they can (costs victims more than it does Nintendo), and everything Disney did to IP legislation.

Okay but I wasn’t arguing morality or about children posting nudes of themselves. I’m just telling you that works submitted into the public domain can’t be retracted and there are models trained on exclusively open data, which a lot of AI haters don’t know, understand or won’t acknowledge. That’s all I’m saying. AI is not bad, corporations make it bad.

The law isn’t a reliable compass for what is or isn’t right.

Fuck yea it ain’t. I’m the biggest copyright and IP law hater on this platform, and I’ll get ahead of the next 10 replies by saying no, it’s not because I want to enable mindless corporate content scraping; it’s because human creativity shouldn’t be boxed in. It should be shared freely, lest our culture be lost.

The existing models were all trained on stolen art

Corporate models, yes

Link to this noncorporate, ethically sourced ai plz because I’ve heard a lot but I’ve never seen it

I guess it is inevitable that self-centred, ego-stroking bubble communities appear in platforms such as Lemmy, where reasoned, polite discussion is discouraged and opposing opinions are drowned out.

Well, I’ll just leave this comment here in the hope someone reads it and realises how bad these communities actually are. There’s a lot to hate about AI (especially companies dedicated to selling it), but not all of it is bad. As with any technology, it’s about how you use it, and this kind of community is all about cancelling everything and never advancing or improving.

The way it’s being used sucks tho

Yeah, there are legitimate complaints against GAI and most of all the companies trying to lead it. But having a community where the only point of view accepted is direct and absolute hatred, including towards people trying to look at adequate and ethical usage of the technology, is just plainly bad and stupid, like any other social bubble.

Also these “Fuck AI” people are usually the biggest hypocrites; they use AI behind the scenes.

Obviously not all AI is bad, but it’s clear the current way GenAI is being developed, and the most popular, mainstream options are unethical. Being against the unethical part requires taking a stand against the normalization and widespread usage of these tools without accountability.

You’re not the wise one amongst fools, you’re just being a jerk and annoying folks who see injustice and try to do something about it.

I guess it’s inevitable that self-centered, pseudo-intellectual individuals like you would appear in platforms such as Lemmy to ask for civility and attention while spouting bullshit.

Well, the environmental costs are pretty high… One request to ChatGPT 3.5 was estimated to be 100 times more expensive than a Google search request. Plus training.

This doesn’t change no matter the use case. Same with the copyright issues.

There is utility in ai. E.g. in medical stuff like detecting cancer.

Sadly, the most funded ai stuff is LLMs and image generation AIs. These are bad.

And a lot of ai stuff has major issues with racism.

“But ai has potential!!!” Yeah but it isn’t there and actively harms people. “But it could…” but it isn’t. Hilter could have fought against discrimination but sadly he chose the Holocaust and war. The potential of good is irrelevant to the reality of bad. People hate the reality of it and not the pipe dreams of it.

Hitler

Oh, you had to deliberately Godwin a perfectly good point. Take my upvote nevertheless 😂

Well I think it makes the point very clear.

oh, the luddites have their own instance now, huh? cat’s out of the bag, folks. deal with it.

Damn straight!

Rejecting the inevitable is dumb. You don’t have to like it but don’t let that hold you back on ethical grounds. Acknowledge, inform, prepare.

Wait, you don’t have to like it, but ethical reasons shouldn’t stop you?

They said the same thing about cloning technology. Human clones all around by 2015, it’s inevitable. Nuclear power is the tech of the future, worldwide adoption is inevitable. You’d be surprised by how many things declared “inevitable” never came to pass.

It’s already here dude. I’m using AI in my job (supplied by my employer) daily and it makes me more efficient. You’re just grasping at straws to fit your preconceived ideas.

It’s already here dude.

Every 3D TV fan said the same. VR enthusiasts for two decades as well. Almost nothing, and most certainly no tech, is inevitable.

The fact that you think these are even comparable shows how little you know about AI. This is the problem, your bias prevents you from keeping up to date in a field that’s moving fast af.

Sir, this is a Wendy’s. You personally attacking me doesn’t change the fact that AI is still not inevitable. The bubble is already deflating, and the public has started to grow indifferent to it, even annoyed by it. Some places are already banning AI for a myriad of different reasons, one of them being how insecure it is to feed sensitive data to a black box. I used AI heavily and have read all the papers. LLMs are cool tech, machine learning is cool tech. They are not the brain-rotted marketing that capitalists have been spewing like madmen. My workplace experimented with LLMs, and management decided to ban them, because they are insecure, they are awfully expensive and resource intensive, and they were making people less efficient at their work. If it works for you, cool, keep doing your thing. But it doesn’t mean it works for everyone; no tech is inevitable.

I’m also annoyed by how “in the face” it has been, but that’s just how marketing teams have used it as the hype train took off. I sure do hope it wanes, because I’m just as sick of the “ASI” psychos. It’s just a tool. A novel one, but a tool nonetheless.

What do you mean “black box”? If you mean [INSERT CLOUD LLM PROVIDER HERE] then yes. So don’t feed sensitive data into it then. It shouldn’t be in your codebase anyway.

Or run your own LLMs

Or run a proxy to sanitize the data locally on its way to a cloud provider
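For anyone wondering what “sanitize locally” could look like in practice, here is a minimal sketch, assuming a small Python step sitting in front of whatever client talks to the cloud provider. The regex rules, the function names `sanitize` and `forward_to_provider`, and the `send` callback are all hypothetical; regex redaction like this will miss plenty of sensitive data and is nowhere near a complete solution.

```python
import re

# Hypothetical redaction rules: each pattern is replaced before the prompt leaves the machine.
REDACTION_PATTERNS = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "<EMAIL>"),  # e-mail addresses
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "<IPV4>"),  # dotted IPv4 addresses
    # "password: x", "api_key=x", "token = x" style secrets; keeps the key name, masks the value
    (re.compile(r"(?i)\b(api[_-]?key|token|password)\s*[:=]\s*\S+"), r"\1=<SECRET>"),
]


def sanitize(prompt: str) -> str:
    """Return a copy of the prompt with sensitive-looking substrings masked."""
    for pattern, replacement in REDACTION_PATTERNS:
        prompt = pattern.sub(replacement, prompt)
    return prompt


def forward_to_provider(prompt: str, send) -> str:
    """Sanitize locally, then hand off to `send`, a stand-in for the real (hypothetical) cloud client."""
    return send(sanitize(prompt))


if __name__ == "__main__":
    print(sanitize("Ping admin@example.com at 10.0.0.12, password: hunter2"))
```

The point of the design is that the provider only ever sees the output of `sanitize`; what counts as sensitive is a local policy decision, not something you trust the black box with.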

There are options, but it’s really cutting edge so I don’t blame most orgs for not having the appetite. The industry and surrounding markets need to mature still, but it’s starting.

Models are getting smaller and more intelligent, capable of running on consumer CPUs in some cases. They aren’t genius chat bots the marketing dept wants to sell you. It won’t mop your floors or take your kid to soccer practice, but applications can be built on top of them to produce impressive results. And we’re still so so early in this new tech. It exploded out of nowhere but the climb has been slow since then and AI companies are starting to shift to using the tool within new products instead of just dumping the tool into a chat.

I’m not saying jump in with both feet, but don’t bury your head in the sand. So many people are very reactionary against AI without bothering to be curious. I’m not saying it’ll be existential, but it’s not going away, I’m going to make sure me and my family are prepared for it, which means keeping myself informed and keeping my skillset relevant.

We had a custom-made model, running in a data center behind a proxy and encrypted connections. It was atrocious: no one ever knew what it was going to do, it spewed hallucinations like crazy, it was awfully expensive, it didn’t produce anything of use, it refused to answer shit it was trained to do, and it randomly leaked sensitive data to the wrong users. It was not going to assist, much less replace, any of us, not even in the next decade. Instead of falling for the sunk cost fallacy like most big corpos, we just had it shut down, told the vendor to erase the whole thing, wrote off the costs as R&D, and decided to keep doing our thing. Due to the nature of our sector, we are the biggest players, and no competitor, no matter how advanced the AI they use, will ever get close to even touching us. But yet again, due to our sector, it doesn’t matter. Turns out AI is a hindrance and not an asset to us; such is life.

Ai isn’t magic. It isn’t inevitable.

Make it illegal and the funding will dry up and it will mostly die. At least, it wouldn’t threaten the livelihood of millions of people after stealing their labor.

Am I promoting a ban? No. Ai has its use cases but is current LLM and image generation ai bs good? No, should it be banned? Probably.

Illegal globally? Unless there’s international cooperation, funding won’t dry up - it will just move.

That is such a disingenuous argument. “Making murder illegal? People will just kill each other anyways, so why bother?”

This isn’t even close to what I was arguing. Like any major technology, all economically competitive countries are investing in its development. There are simply too many important applications to count. It’s a form of arms race. So the only way a country may see fit to ban its use in certain applications is if there are international agreements.

The concept that a snippet of code could be criminal is asinine. Hardly enforceable, never mind the 1st Amendment issues.

You could say fascism is inevitable. Just look at the elections in Europe or the situation in the USA. Does that mean we can’t complain about it? Does that mean we can’t tell people fascism is bad?

No, but you should definitely accept the reality, inform yourself, and prepare for what’s to come.

You probably create AI slop and present it proudly to people.

AI should replace dumb monotonous shit, not creative arts.

I couldn’t care less about AI art. I use AI in my work every day in dev. The coworkers who are not embracing it are falling behind.

Edit: I keep my AI use and discoveries private, nobody needs to know how long (or little) it took me.

I use gpt to prototype out some Ansible code. I feel AI slop is just fine for that; and I can keep my brain freer of YAML and Ansible, which saves me from alcoholism and therapy later.

“i am fine with stolen labor because it wasn’t mine. My coworkers are falling behind because they have ethics and don’t suck corporate cock but instead understand the value in humanity and life itself.”

Lmao relax dude. It’s just software.

I couldn’t care less about AI art.

That’s what the OP is about, so…

Has AI made you unable to read?

The objections to AI image gens, training sets containing stolen data, etc. all apply to LLMs that provide coding help. AI web crawlers search through git repositories compiling massive training sets of code, to train LLMs.

Just because I don’t have a personal interest in AI art doesn’t mean I can’t have opinions.

But your opinion is off topic.

It’s all the same… Not sure why you’d have differing opinions between AI for code and AI for art, but please lmk, I’m curious.

Code and art are just different things.

Art is meant to be an expression of the self and a form of communication. It’s therapeutic, it’s liberating, it’s healthy and good. We make art to make and keep us human. Chatbot art doesn’t help us, and in fact it makes us worse - less human. You’re depriving yourself of enrichment when you use a chatbot for art.

Code is mechanical and functional, not really artistic. I suppose you can make artistic code, but coders aren’t doing that (maybe they should, maybe code should become art, but for now it isn’t and I think that’s a different conversation). They’re just using tools to perform a task. It was always soulless, so nothing is lost.

Then most likely you will start falling behind… perhaps in two years, as it won’t be noticeable quickly, but there will be an effect in the long term.

This is a myth pushed by the anti-ai crowd. I’m just as invested in my work as ever but I’m now far more efficient. In the professional world we have code reviews and unit tests to avoid mistakes, either from jr devs or hallucinating ai.

“Vibe coding” (which most people here seem to think is the only way) professionally is moronic for anything other than a quick proof of concept. It just doesn’t work.

I know senior devs who fell behind just because they use too much google.

This is demonstrably much worse.

Lmao the brain drain is real. Learning too much is now a bad thing

Removed by mod

Totally lost, can someone give me a tldr?

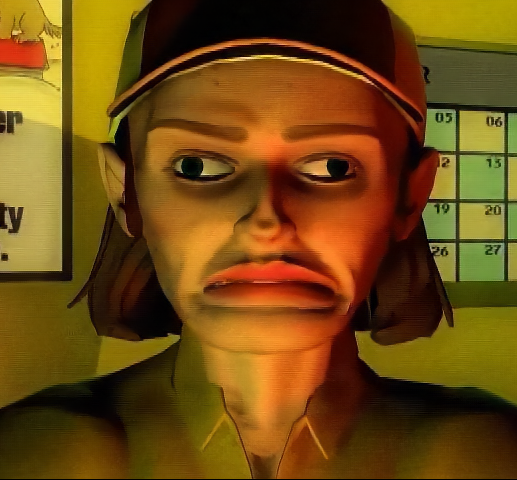

It’s a person who runs a database of game UI’s being contacted by people who want to train AI models on all of the data en masse.

The more I see dishonest, blindly reactionary rhetoric from anti-AI people - especially when that rhetoric is identical to classic RIAA brainrot - the more I warm up to (some) AI.

You won’t be the only one😉

Astroturfing. I don’t believe you.

Astroturfing? That implies I’m getting paid or compensated in any way, which I’m not. Does your commenting have anything to do with anything?

Astroturf is fake grass. Are you positive that guy in the picture is being paid? He might just be a proud boy.

Oh, I have no idea who anyone in the photo is. I’m just tired of all the dishonesty coming from both extremes around AI stuff.

I am a liar?

It is in fact the opposite of reactionary to not uncritically embrace your energy-guzzling, disinformation-spreading, profit-driven “AI”.

As much as you don’t care about language, it actually means something and you should take some time to look inwards, as you will notice who is the reactionary in this scenario.

“Disinformation spreading” is irrelevant in this discussion. LLMs are a whole separate conversation. This is about image generators. And on that topic, you position the tech as “energy guzzling” when that’s not necessarily always the case, as people often show; and profit-driven, except what about the cases where it’s being used to contribute to the free information commons?

And lastly you’re leaving out the ableism in being blindly anti-AI. People with artistic skills are still at an advantage over people who either lack them, are too poor to hire skilled artists, and/or are literally disabled whether physically or cognitively. The fact is AI is allowing more people than ever to bring their ideas into reality, where otherwise they would have never been able to.

Listen, if you want to argue for facilitating image creation for people who aren’t skilled artists, I—and many more people—are willing to listen. But this change cannot be built on top of the exploitation of worldwide artists. That’s beyond disrespectful, it’s outright cruel.

I could talk about the other points you’re making, but if you were to remember one single thing from this conversation, please let it be this: supporting the AI trend as it is right now is hurting people. Talk to artists, to writers, even many programmers.

We can still build the tech ethically when the bubble pops, when we all get a moment to breathe, and talk about how to do it right, without Sam Altman and his million greedy investors trying to drive the zeitgeist for the benefit of their stocks, at the cost of real people.

There are literally image generation tools that are open-source. Even Krita has a plugin available. There are multiple datasets that can be trained on, other than the one that flagrantly infringed everyone’s copyrights, and there is no shortage of instructions online for how people can put together their own datasets for training. All of this can be run offline.

So no, anyone has everything they need to do this right, right now if they want to. Getting hysterical about it, and dishonestly claiming that it all “steals” from artists helps no one.

Yes, I like the unethical thing… but it’s the fault of people who are against it. You see, I thought they were annoying, and that justifies anything the other side does, really.

In my new podcast, I explain how I used this same approach to reimagine my stance on LGBT rights. You see, a person with the trans flag was mean to me on twitter, so I voted for—

Wow, using a marginalized group who are actively being persecuted as your mouthpiece, in a way that doesn’t make sense as an analogy. Attacking LGBTQI+ rights is unethical, period. Where your analogy falls apart is in categorically rejecting a broad suite of technologies as “unethical” even as plenty of people show plenty of examples of when that’s not the case. It’s like when people point to studies showing that sugar can sometimes be harmful and then saying, “See! Carbs are all bad!”

So thank you for exemplifying exactly the kind of dishonesty I’m talking about.

My comment is too short to fit the required nuance, but my point is clear, and it’s not that absurd false dichotomy. You said you’re warming up to some AI because of how some people criticize it. That shouldn’t be how a reasonable person decides whether something is OK or not. I just provided an example of how that doesn’t work.

If you want to talk about marginalized groups, I’m open to discussing how GenAI promotion and usage is massively harming creative workers worldwide—the work of which is often already considered lesser than that of their STEM peers—many of whom are part of that very marginalized group you’re defending.

Obviously not all AI, nor all GenAI, are bad. That said, current trends for GenAI are harmful, and if you promote them as they are, without accountability, or needlessly attack people trying to resist them and protect the victims, you’re not making things better.

I know that the broken arguments of people who don’t understand all the details of the tech can get tiring. But at this stage, I’ll take someone who doesn’t entirely understand how AI works but wants to help protect people over someone who only cares about technology marching onwards, the people it’s hurting be damned.

Hurt, desperate people lash out, sometimes wrongly. I think a more respectable attitude here would be helping steer their efforts, rather than diminishing them and attacking their integrity because you don’t like how they talk.

I’m sorry you’re not creative.

I’m certainly creative enough not to resort to childish ad hominems immediately.

Nah, you do so in the second comment here, like a proper lady. I can’t believe his lack of decorum. Is your sensitive soul ok?

Then there’s always that one guy who’s like “what about memes?”

MSpaint memes are waaaaay funnier than Ai memes, if only due to being a little bit ass.

Plus it let people with…let’s say no creative ability to speak of to be nice about it…make memes.

Anyone can have creative ability if they actually try though. And a lot of the low tech, low quality tools can produce some fun results if you embrace the restrictions.

Ass is the most important thing in a meme

EDIT: I feel like I could have written this better but I will stand by it nonetheless

Thank you for your service

It seems we’ve come full circle with “copying is not theft”… I have to admit I’m really not against the technology in general, but the people who are currently in control of it are all just the absolute worst people who are the least deserving of control over such a thing.

Is it hypocritical to think there should be rules for corporations that don’t apply to real people? Like, why is it the other way around, where I can go to jail or get a fine for sharing the wrong files, but some company does it and they just say it’s for the “common good” and they “couldn’t make money if they had to follow the laws” and they get a fucking pass?

On the other hand, now I can download all the music I want because I’m training an AI. (Code still under development).

Yeah, I’ve been a pirate for so long I have zero moral grounds to be against using copyrighted stuff for free…

Except I’m not burning a small nation’s worth of energy to download a NoFX album and I’m not recreating that album and selling it to people when they ask for a copy of Heavy Petting Zoo (I’m just giving them the real songs). So, moral high ground regained?

I haven’t heard of this before, but it looks interesting for game devs.

Game UI Database: https://www.gameuidatabase.com/index.php

Me either. Seems like it would be a really handy site to use if you were making your own game and wanted to see some examples or best practices.

Had no idea it existed, I don’t make games though. But it’s always so cool to see something built that serves a unique niche I had never thought of before! Consulting used to be like that for me, but after a while, it was always the same kinds of business problems, just a different flavor of organization.